kzimmermann's take on the 2024 Old Computer Challenge

I'm a little late to the party, but here it is: my take on the Old Computer Challenge 2024!

What was started in 2021 by an avid OpenBSD user and developer as a way to challenge our increasingly bloated computer usage has now apparently been rolling out every summer, and it's on its fourth edition by now. And while I did participate in the first edition, somehow I managed to miss out on the previous two (perhaps I was busying myself too much with work?), and in a few ways I thought that my first take in 2021 was not completely "true" to the spirit of the actual challenge.

For example, despite having only a single core available to me, I didn't artificially limit the RAM, instead relying on manual control to limit the consumption and terminating processes as needed. In addition, I felt that I played "defense" too much, burying myself in the terminal and not really exploring how much lightweightness there was even in a graphical environment. So this year, I decided to flip the approach and take on the challenge a little more raw.

So here are the rules for the 2024 challenge for me:

- Maximize the use of graphical applications. Anyone can run a whole show under 200MB in the terminal with tmux and a wide arrangement of CLI or TUI-only programs. Let's see how much of that can be done with graphical applications instead.

- Try to keep as much of my traditional workflow (home-based, not actual work) untouched despite using the alternative software described above.

- Because I'm going fully graphical, lock the RAM limit to 700MB via the kernel parameters. I know, it's a little more than the 512MB of the original challenge.

- If it's absolutely impossible to do something on the old computer, don't use a smartphone, use another computer instead.

The last rule about no smartphones is a significant change from my similar previous challenges of no graphical environment. I figured, this time, that if the purpose is to limit my hi-tech, why keep the trend with the smartphone? Nope, I'll try to be as self-sufficient and PC-based as possible.

Let's see how this progressed.

The Hardware

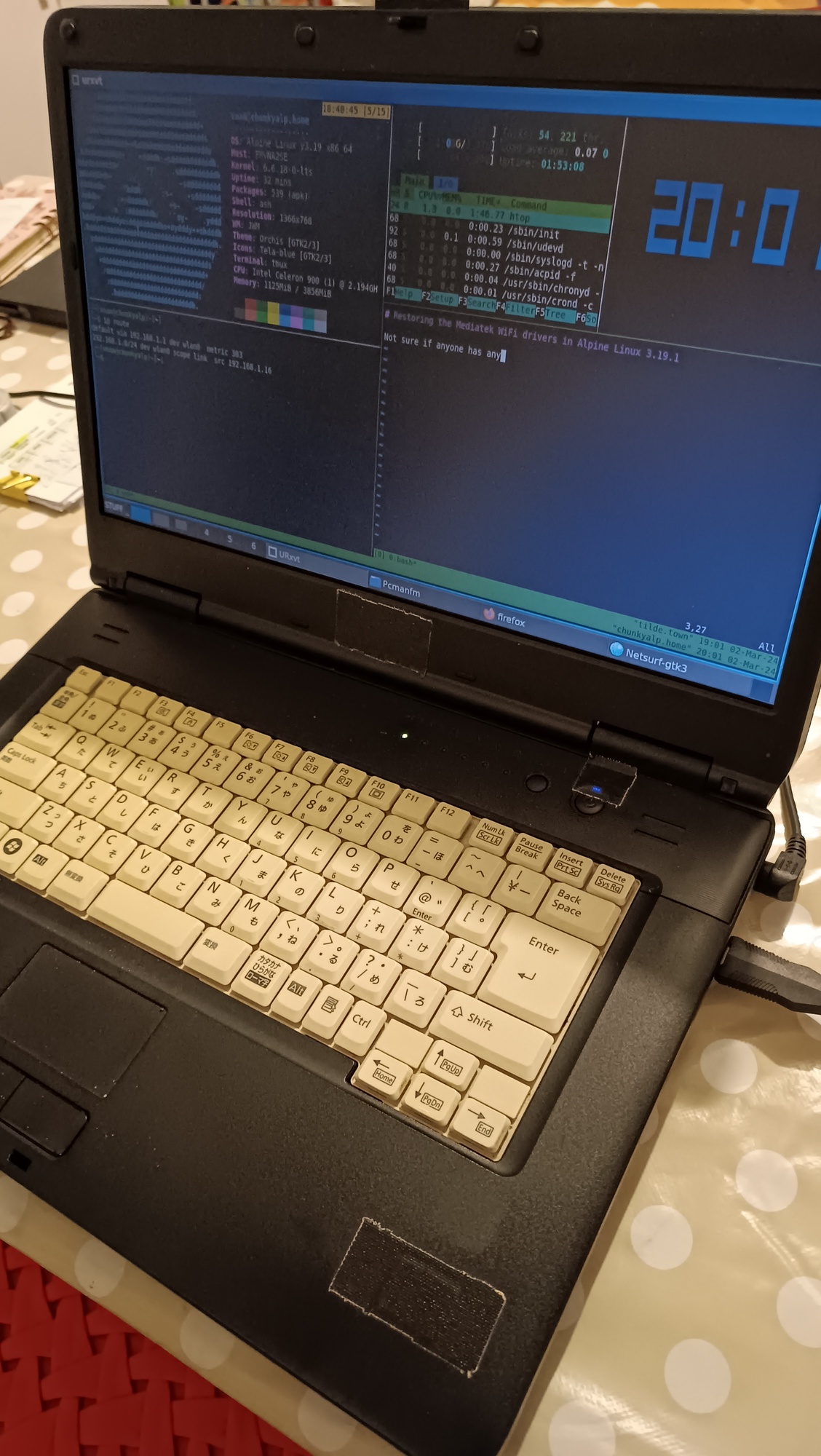

It's probably to nobody's surprise that I'm re-using my old Fujitsu Lifebook that I found while dumpster diving. It isn't as old as much of the stuff that's being used in the challenge out there (being made in 2010), but if you take a look at its specs, you would probably agree that this is indeed a pretty old machine by today's standards - which might be a euphemism for "it has shitty specs."

- CPU: Intel Celeron 900 2.20GHz (a meager single core!)

- RAM: 4GB DDR3

- Storage: 5400 RPM HDD

This laptop is so lacking that it didn't even come with a built-in wifi NIC - in 2010! - so I compensated with a handy 802.11n USB adapter I had. Raw hardware-wise, this is the lowest I've got in the house with me, but those specs still look too new for an Old Computer Challenge, so I had to lower them artificially somehow, which I'll explain later.

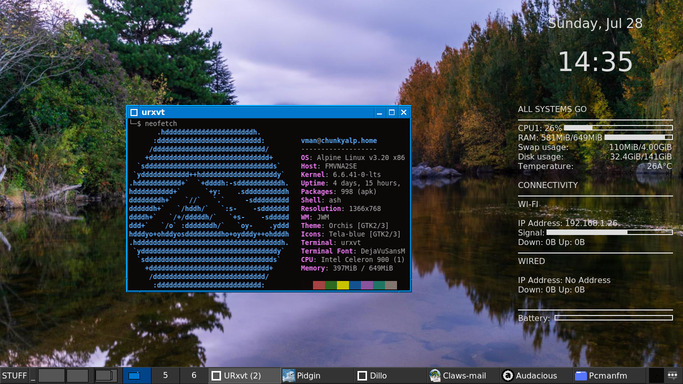

Software starter line

Whereas in 2021 I ran OCC through Debian Buster, this time the extra weight of going maximum GUI meant I had to save up on resources somehow. Trying to pick up the best balance between GUI functionality and the limitations, I whipped out the following software stack:

- OS: Alpine Linux 3.20

- DE / WM: JWM (Joe's Window Manager)

- Browser: dillo web browser

- Mail client: Sylpheed mail

- IRC / IM client: Pidgin

- Audio player: audacious

- Fediverse client: Tut (couldn't find a GUI alternative...)

- RSS: Newsboat (couldn't find a GUI alternative...)

Probably the biggest surprise here was the comeback of dillo, the featherweight graphical browser whose development ceased more than 10 years ago, and yet the community picked up motivation to maintain it again. They released version 3.1.1 on June 8th this year - a leap from the old 3.0.x series of 12 years ago. I was particularly impressed with how it performed!

Lowering the resources artificially

RAM and CPU are still a little too high for my taste, so let's be true to the premises of the challenge and limit them - yes, you can do both in Linux with bare-metal access.

Limiting CPU clock in Linux is really simple. You can use a variant of the trick I used when spending a month on my Raspberry Pi to speed it up with the cpupower command, but this time for the opposite effect: capping the maximum clock speed. So setting something like:

cpupower frequency-set -u 1000000

Will bring back a nice back-to-2003 feeling with a 1GHz max CPU.
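As a rough sketch of how that cap could be wrapped and double-checked: cpupower reads a bare number as kHz, and the kernel reports the cap it currently enforces, also in kHz, through the cpufreq sysfs files. The function names below are hypothetical helpers, not part of cpupower, and the sysfs path assumes the usual cpufreq layout for cpu0.

```shell
#!/bin/sh
# Hypothetical helpers (illustrative names, not part of cpupower).

# cpupower treats a bare number as kHz, so convert from MHz.
mhz_to_khz() {
    echo $(( $1 * 1000 ))
}

# Print the command that would cap the clock; run it as root to apply it.
cap_command() {
    echo "cpupower frequency-set -u $(mhz_to_khz "$1")"
}

# Show the cap the kernel currently enforces for cpu0, if cpufreq is exposed.
show_cap() {
    f=/sys/devices/system/cpu/cpu0/cpufreq/scaling_max_freq
    if [ -r "$f" ]; then
        echo "cpu0 max: $(cat "$f") kHz"
    else
        echo "cpufreq sysfs interface not available here"
    fi
}

cap_command 1000
show_cap
```

Printing the command instead of running it keeps the sketch harmless on machines where you don't want the clock touched; the cap set this way does not survive a reboot.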

For RAM, this was a little more complicated. I knew that there were kernel parameters (also known as "cheat codes") that you could pass to modify the maximum amount of RAM the kernel can use (and thus, the whole OS), but Alpine defaults to not giving out the prompt when booting - it goes from bootloader to init so quickly that there is basically no time to type them in.

The alternative was to use a RAM disk, but it seemed silly to create a 3.5GB garbage file to populate a ramdisk just for this challenge, so I had to tweak the boot settings a little bit. Alpine uses syslinux as the bootloader by default, and its configuration is stored in /boot/extlinux.conf. Editing two lines in that file enabled me to get the nice boot: prompt to pass parameters:

PROMPT 1

TIMEOUT 500

PROMPT set to 1 is what enables you to get the parameters prompt. However, by itself, it would still not work because the whole bootloader timed out in the blink of an eye. This is because TIMEOUT was set to 10 - and those are not seconds, but rather tenths of a second. Raising it to 500 gives us 50 seconds to react and type something in, which is more than enough. Now we can pass the following parameters to the boot: prompt we see after rebooting:

boot: lts mem=700M

lts is the actual kernel we're going to boot. I was a little surprised that the kernel isn't named linux here, or not even linux-lts as it's named in the package, but that combination worked in the end. And don't forget the M after the number - otherwise, you instruct the kernel to use only 700 bytes of memory.
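To see why the suffix matters, and to confirm after booting that the limit was actually picked up, something like the following could be used. It is only a sketch: mem_param and to_bytes are hypothetical helper names, and the check simply compares the mem= value found in /proc/cmdline with the MemTotal line of /proc/meminfo.

```shell
#!/bin/sh
# Hypothetical helpers for checking a mem= limit (illustrative names).

# Extract the value of mem= from a kernel command line string.
mem_param() {
    printf '%s\n' "$1" | tr ' ' '\n' | sed -n 's/^mem=//p' | head -n 1
}

# Convert a value like 700M to bytes. A bare number is already bytes,
# which is why forgetting the suffix is so drastic.
to_bytes() {
    num=${1%[KkMmGg]}
    case $1 in
        *[Kk]) echo $(( num * 1024 )) ;;
        *[Mm]) echo $(( num * 1024 * 1024 )) ;;
        *[Gg]) echo $(( num * 1024 * 1024 * 1024 )) ;;
        *)     echo "$num" ;;
    esac
}

# On a running system, compare the requested cap with what the kernel sees.
limit=$(mem_param "$(cat /proc/cmdline 2>/dev/null)")
if [ -n "$limit" ]; then
    echo "requested: $limit ($(to_bytes "$limit") bytes)"
    grep MemTotal /proc/meminfo
fi
```

MemTotal normally reads a bit lower than the requested value, since the kernel keeps some memory for itself before reporting the rest.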

And now with a low-resource system in hand, it's time to run the challenge properly!

Keeping track of RAM usage - a handy script

Sure, you can use things like top or htop to monitor your overall resource consumption, but sometimes it's useful to get an accurate read of each process's own memory usage, either to find the "resource hogs" out there or to compare two similar processes. This is where I deployed this handy script that gives me that information, but tailored to a specific process:

    #!/bin/sh
    #
    # mem:
    # finding the memory used by a process. This should give some pretty good idea.
    # pass $1 as the process name, like:
    # $ mem dillo
    #
    # Credit where it's due: https://stackoverflow.com/a/20277787 with some
    # modifications added by kzimmermann.
    #

    if [ -z "$1" ]
    then
        echo "Please provide a process to find out how much memory it's using"
        exit 1
    fi

    # List RSS (in kB), PID, user and command line, sorted by memory share;
    # keep the lines matching the name and convert the RSS column to MB.
    ps -eo rss,pid,euser,args:100 --sort %mem |
        grep -v grep | grep -i -- "$1" |
        awk '{printf $1/1024 "MB"; $1=""; print }' |
        grep -v "$(which mem)" || echo "Process $1 not found"

Save this as mem somewhere in your executable path. Note that for this to work under Alpine (where ps is by default provided as a busybox utility), you must explicitly install the procps-ng package. Works out of the box in other Linux distributions, but sadly not in FreeBSD.
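Programs that spawn several processes show up as several lines in that output. A possible companion, sketched under the same procps-ng assumption, adds the RSS column up into a single figure. The names sum_rss_mb and memtotal are hypothetical, and since RSS counts shared pages once per process, the total is an upper bound rather than an exact number.

```shell
#!/bin/sh
# Hypothetical companion to mem: total RSS of every process matching a name.

# Read "RSS_IN_KB rest-of-line" rows on stdin and print the total in MB.
sum_rss_mb() {
    awk '{ total += $1 } END { printf "%.1fMB\n", total / 1024 }'
}

# List RSS and command line of all processes (procps-ng ps), keep the
# matching ones and add them up. The "=" suffixes suppress the header.
memtotal() {
    ps -eo rss=,args= | grep -i -- "$1" | grep -v grep | sum_rss_mb
}
```

Used as a shell function, memtotal firefox would print one line with the sum. If it is saved as a standalone script instead, its own command line will match the name too, so it would need the same kind of self-filter that mem applies.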

The experiment

A cold boot into the login prompt consumes about 60 MB RAM. We could live in the command-line forever and never go past 200, but this is not the point.

The graphical session with basic applications open (browser, mail client, messenger, file manager, etc) idled at around 350-400 MB depending on the use. And this more or less remained true until I deepened the test a little bit with some additional stress in the system.

Generic Browsing and surfing

Opening a lot of tabs (10~15) in Dillo didn't stress it too much at first because of its incredible efficiency with resources, and everything loaded very quickly. However, one thing that became apparent after a prolonged session of use was the weight of Dillo's caching mechanism. It helpfully caches every single image it loads in an attempt to make reloading the page or other pages of the same site faster for you. It keeps this cache in RAM, which makes reloading them extremely fast, but apparently it also has no limit (in time or space).

This makes the browser eat up progressively more RAM as you surf for a long time, which, at a cap of 700MB, starts to weigh down and swap in the long run. The only way to free up this cache apparently is to re-open the browser, which is a small break from the workflow. Pity, it would be great if you could limit the cache dillo uses via dillorc or something. With today's much faster internet, reloading images is not so much a problem anymore and you'd save a lot more RAM.
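Since restarting the browser seems to be the only remedy, one option is a small check that tells you when it is time to do so. This is a minimal sketch, assuming procps-ng's ps is installed (as for the mem script) and using an arbitrary 200MB threshold; over_limit, rss_kb and check are hypothetical names chosen for illustration.

```shell
#!/bin/sh
# Hypothetical watchdog sketch: warn when a process grows past a limit.

# Succeed if an RSS value (in kB) is above the limit (in MB).
over_limit() {
    [ "$1" -gt $(( $2 * 1024 )) ]
}

# Largest RSS in kB among processes named $1, or 0 if none is found.
rss_kb() {
    ps -C "$1" -o rss= 2>/dev/null | sort -n | tail -n 1 |
        awk '{ print $1 + 0 } END { if (NR == 0) print 0 }'
}

# Check once; call it from a loop or from cron as needed.
check() {
    used=$(rss_kb "$1")
    if over_limit "$used" "$2"; then
        echo "$1 is using $(( used / 1024 ))MB, consider restarting it"
    fi
}

check dillo 200
```

The check prints nothing while the process stays under the limit, so it can run quietly in the background.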

Emails

Emails with Sylpheed are a breeze. The setting up of accounts is done via a graphical wizard and is pretty straightforward - even offering a predefined setup way for Gmail for those who use it. It even has (I guess, native?) PGP support now. Having a GUI for everything feels a little weird for me, but I can't say that it's unusable or worse. Writing and reading emails were a breeze, as quick as mutt.

There was only one problem - Yahoo mail. I know, big tech, etc. But I have a backup address there which, sadly, sometimes is the only one that works. It might be that some providers flag my other addresses as spam, others don't receive the mail at all, some don't send the email to other providers, and all in all, sometimes it's the only provider that works. I mean, my choice was either that or a Gmail / Hotmail account ¯\_(ツ)_/¯.

At any rate, Sylpheed does not show my Inbox for Yahoo mail - at all. It shows mails from every other box, including the ones flagged as spam, but for the Inbox I get nothing. Zip. And it seems that other people have some other problems with Yahoo as well.

I pondered switching to claws-mail, a known Sylpheed fork with arguably more modern features, but one that comes with palpable bloat. Just for comparison, claws-mail with its full dependency list takes 122 MB of disk space when installed. Sylpheed on the other hand takes about 2300kB. That's kilobytes, my friend. However, claws-mail indeed seems to honor the message: it supports several more authentication mechanisms for email, including OAuth2 that Yahoo Mail seems to require. But RAM-wise, the difference also shows: at top usage, everything running, claws-mail uses about 71MB, vs 37MB for Sylpheed doing the same tasks.

$ mem claws-mail

71.2578MB 4725 kzimmermann claws-mail

$ mem sylpheed

37.8828MB 2735 kzimmermann sylpheed

Now claws-mail was all fine and dandy in reading my emails from the Yahoo Inbox, until I decided to reply to one of them. This quite draconian error message prevents me from sending emails with claws-mail, apparently:

SMTP< 554 Email rejected

Come to think of it, perhaps I should ditch Yahoo instead of changing email clients. Though mutt never complained!

Office-like work

For the Office work part, I decided to really stretch it. I opened LibreOffice straight up instead of the often lighter alternatives of gnumeric or Abiword just to see how far I could stretch it. Turns out it wasn't a problem at all - LibreOffice loaded quite alright (at the expense of some swapping), and it worked quite well to edit some spreadsheets and write some documents. There was some delay and considerable latency due to the swapping going on between the applications, but it was incredibly usable.

Editing moderately complex spreadsheets was a breeze and I even had to write a snail-mail letter to formalize something. No problem at all with writing, formatting and printing it with LibreOffice Writer.

If this was a pleasant surprise that didn't spoil the experience much, however, the next step would undo everything.

Communication and news

I did a double-take and made both IRC and XMPP work under the swiss-army knife of Pidgin, which I'll say was an extremely nostalgic motion, given that it was what I had used way back when I started using Linux. I didn't have OMEMO encryption, but since I host the server on my premises with my own hardware, I didn't think the risk was big anyway. Libpurple's IRC support is also excellent, and allowed me to even browse a bit of DCC channels and find a few things.

For my RSS, I could not find any graphical alternatives in Alpine, so I used my usual Newsboat. For some reason Liferea was not installing due to a dependency error. Maybe next time?

Dealbreaker: running a modern browser

Firefox had been sitting there in the corner the whole time of this party and I was really not feeling like ruining my own lightweight computing party. But the goals of the challenge had been clear from the start - stretch out and see the limits that the minimum computing could take even with graphical applications - so I eventually broke the seal and put it to work. I had another reason, though. I had to check something in the bank, and this might as well give it enough of a reason to go.

It didn't last long anyway; running Firefox at all turned out to be an impossible task. And I mean literally, because it didn't even open a single page. Firefox opened the blank tab window and when I opted for Wikipedia, it froze. At this point, RAM usage shot through the roof, and the swapped amount was going up fast. 200MB, then 300, 500 and then 700MB, and Firefox was still frozen. It got stuck like this for about five minutes with no progress at all and then I aborted the attempt. I killed Firefox from the shell, watched the swap get freed a little, and finally, true to the "no-smartphone" constraint, went to my other computer to get to my bank.
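For anyone wanting to watch the same thing happen, swap usage can be read straight from /proc/meminfo as SwapTotal minus SwapFree. The snippet below is a sketch of that calculation; swap_used_mb is a hypothetical helper name, and it reads meminfo-formatted text from standard input so it can be tried on sample data as well.

```shell
#!/bin/sh
# Hypothetical helper: report swap in use, in MB, from /proc/meminfo data.

# Reads meminfo-formatted text on stdin (the values are in kB).
swap_used_mb() {
    awk '/^SwapTotal:/ { total = $2 }
         /^SwapFree:/  { free = $2 }
         END { printf "%d\n", (total - free) / 1024 }'
}

# Print one reading if the file is available.
if [ -r /proc/meminfo ]; then
    echo "swap used: $(swap_used_mb < /proc/meminfo)MB"
fi
```

Wrapping the call in a loop such as while sleep 5; do swap_used_mb < /proc/meminfo; done gives a running view of the climb.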

So, as for workarounds? Borrowing a line from my previous OCC, mpv $YT_URL to watch videos from YouTube (provided yt-dlp is installed) is a great and lightweight way. I watched fullscreen videos with no problem, even accelerating the playback. If the lag was big, I passed the format=18 flag to the command and the quality was just enough. And for music I just downloaded the files through this method and played them in the Audacious media player.
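Spelled out, those commands might look like the sketch below. It assumes the format flag refers to mpv's --ytdl-format option, and that format code 18 is YouTube's 360p MP4 stream, which is how it is commonly listed. The audio download line is a guess at one way to do it with yt-dlp, not necessarily the exact command used here. watch_cmd and audio_cmd are hypothetical names, and they only print the commands so they can be reviewed before running.

```shell
#!/bin/sh
# Hypothetical wrappers; they print the commands instead of running them.

# Stream a video through mpv, optionally forcing a yt-dlp format code.
watch_cmd() {
    if [ -n "$2" ]; then
        echo "mpv --ytdl-format=$2 '$1'"
    else
        echo "mpv '$1'"
    fi
}

# Download only the best available audio track for later playback.
audio_cmd() {
    echo "yt-dlp -f bestaudio '$1'"
}
```

For example, watch_cmd "$YT_URL" 18 prints the low-resolution variant, and piping the output to sh runs it.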

Lessons learned and conclusion

And today we come to a close, a full week after the challenge technically "expired", though I decided to try it anyway. In some ways I'm so glad that I dove into it head-on and really added the realism bit by lowering the resources even more.

While for some people this might have represented a "back to 1999" experience, for me the Old Computer Challenge 2024 brought me back to 2011 specifically, about a year after I had started using Linux. At that point, I had become sort of familiar with Linux and knew my way around it, but had not been delving into the command line yet like I do today, so most of the stuff I did still had to be graphical, but lightweight. This is why some of the software I used was so familiar and nostalgic to me, like Pidgin, Sylpheed, Audacious and the mighty Dillo that made a comeback.

I concluded from this experiment that it's still possible in 2024 to do most internet and computer-related tasks from a modest-spec computer with sub-GB RAM and a faltering CPU - as long as you stay away from Big Tech. It's heartwarmingly nice to know this; it means that all the computing that I personally like to do will still be doable in the future - and probably a long one, while we're at it.

The greatest part is: it doesn't have to be restricted to geeky terminal tools! Graphical interface programs don't have to be bloated in order to be useful and intuitive; if you just find the right alternative, you can find the right balance and get things done effectively.

And finally, make no mistake: all of this is only possible because of the miracle of Free Software. Software built democratically, with efficiency and freedom in mind is the only way that hardware otherwise "EOL'd" almost a decade ago can still thrive, in spite of what the greedy capitalists with increasingly bloated software might say. Creative people, with the power to adapt, modify and create software as needed, can keep maintaining software suitable for every computing platform out there big or small.

However, I think I will be rolling back this computer into its full resources for now ;).

Did you take up on the Old Computer Challenge 2024? How did it go? Let me know on Mastodon!

This post is number #57 of my #100DaysToOffload project.

Last updated on 07/28/24