kzimmermann's take on the Old Computer Challenge!

Somewhat late to the party, but it looks like a few weeks ago Solène Rapenne started what's been called the Old Computer Challenge, in which the goal was to survive for one week on the most limited computing resources within your reach.

In my understanding, the goal was less about "who can revive the oldest computer ever" and more about "how much can you do with a limited set of hardware," a bit like a marathon rather than a sprint. Originally, it ran over a specific period (between July 10th and 17th) and came with a set of rules, quoted from her website:

- 1 CPU maximum, whatever the model. This means only 1 CPU / Core / Thread. Some BIOSes allow disabling multi-core.

- 512 MB of memory (if you have more it's not a big deal, if you want to reduce your ram create a tmpfs and put a big file in it)

- using USB dongles is allowed (storage, wifi, Bluetooth whatever)

- only for your personal computer, during work time use your usual stuff

- relying on services hosted remotely is allowed (VNC, file sharing, whatever helps you)

- using a smartphone to replace your computer may work, please share if you move habits to your smartphone during the challenge

And perhaps the most important point (emphasis mine):

- if you absolutely need your regular computer for something really important please use it. The goal is to have fun but not make your week a nightmare
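If your machine has more RAM than the rules allow, the tmpfs trick from the second rule can be scripted. The sketch below is just one way to do it, not Solène's own method; it assumes Linux, root access for mount and dd, and a hypothetical mount point /mnt/ramhog, and the function names are made up for illustration. One caveat: tmpfs pages can be pushed out to swap, so with swap enabled the squeeze is softer than it looks.

```bash
# Sketch of the "create a tmpfs and put a big file in it" trick.
# Assumptions: Linux /proc/meminfo, root for mount/dd, and the hypothetical
# mount point /mnt/ramhog. Function names are illustrative only.

# Print how many MB must be pinned so that only TARGET_MB stays usable.
ramhog_size_mb() {
    local target_mb=$1 total_kb fill_mb
    total_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
    fill_mb=$(( total_kb / 1024 - target_mb ))
    if [ "$fill_mb" -lt 0 ]; then
        fill_mb=0   # machine already has less RAM than the target
    fi
    echo "$fill_mb"
}

# Mount a tmpfs and fill it with zeros (run as root).
ramhog_fill() {
    local dir=/mnt/ramhog size
    size=$(ramhog_size_mb "$1")
    if [ "$size" -eq 0 ]; then
        echo "already at or below $1MB, nothing to fill" >&2
        return 0
    fi
    mkdir -p "$dir"
    # A little headroom so dd doesn't run into "No space left on device".
    mount -t tmpfs -o size="$((size + 16))m" tmpfs "$dir"
    dd if=/dev/zero of="$dir/fill" bs=1M count="$size"
}

# Undo: deleting the file and unmounting frees the memory again.
ramhog_release() {
    rm -f /mnt/ramhog/fill
    umount /mnt/ramhog
}
```

For example, running ramhog_fill 512 as root on a 4GB laptop would pin roughly 3.5GB in the tmpfs, and ramhog_release hands it back.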

I had actually caught wind of this challenge back when it was announced, but forgot about it as the days went by, and didn't realize it had happened until I saw the hashtag floating around on Mastodon a few days ago. Bummer, I'm too late! Or so I thought?

Do I qualify?

Coincidentally, I may have participated in the challenge without noticing: around the same time, I found a ten-year-old computer in the trash and put it to good use. Because I wasn't focused on the challenge, I may have "cheated" a few times, since the one factor I wasn't paying attention to was RAM usage. This laptop has 4GB of RAM (probably upgraded beyond the original specs), and at times I did try to keep usage under 1GB. This time, I'm aware of the limitations and will be stricter about it: 512MB of RAM usage, or some program gets killed (manually, but still).
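Keeping an eye on that 512MB line doesn't need a heavy system monitor. A minimal sketch, assuming Linux 3.14 or newer (where /proc/meminfo reports MemAvailable); ram_used_mb, ram_check and BUDGET_MB are hypothetical names, not part of any existing tool:

```bash
# Hypothetical helper for the "512MB or some program gets killed" rule.
# Assumes Linux >= 3.14, so /proc/meminfo has a MemAvailable line.

BUDGET_MB=${BUDGET_MB:-512}

# Used memory in MB, computed as MemTotal - MemAvailable. Unlike
# MemTotal - MemFree, this doesn't count most reclaimable cache as "used".
ram_used_mb() {
    awk '/^MemTotal:/ {t = $2} /^MemAvailable:/ {a = $2}
         END {print int((t - a) / 1024)}' /proc/meminfo
}

# Print usage against the budget; return 1 when over it.
ram_check() {
    local used
    used=$(ram_used_mb)
    if [ "$used" -gt "$BUDGET_MB" ]; then
        echo "over budget: ${used}MB used of ${BUDGET_MB}MB, close something"
        return 1
    fi
    echo "ok: ${used}MB used of ${BUDGET_MB}MB"
}
```

Running ram_check now and then (or binding it to a key) is enough to know when it's time to close something, and the exit status makes it usable from other scripts.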

I may have some experience with the "suffering" this challenge involves, since an earlier personal challenge had me using only the command line for a full week. However, even though the tools might be similar, I feel this challenge has a different goal than sticking to the command line. I believe the more relevant questions it can answer are:

- Are there any modern graphical applications that still run lightweight enough in 2021?

- Can you still browse the web in a functional and modern way without consuming a Gigabyte of RAM?

- Are there any window managers that can truly be efficient and not take a chunk of the RAM, but still look pleasing?

- If you had nothing but an old machine you found in the trash, could you still be technologically in line with the current environment?

To answer that, let's see the software side.

The software starter line

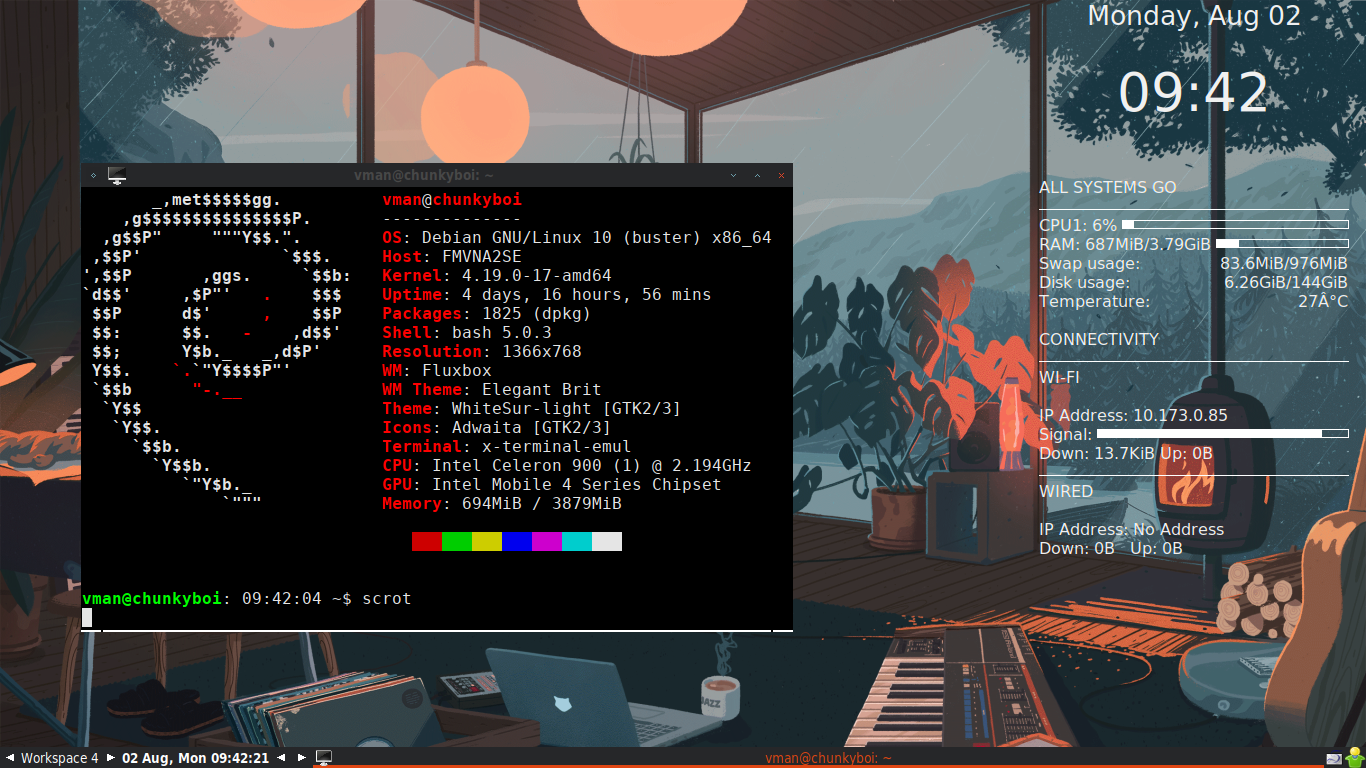

Having interesting hardware to begin with is just half of the equation. On the software side, I'm using:

- Debian Buster as the OS

- Fluxbox as the window manager

- netsurf-gtk as the browser, with a fallback to dillo if it becomes "too heavy"

- An assorted collection of minimalist applications, with the CLI to glue them together

Yes, my choice of OS is quite boring given the wild variety of systems other people chose for the task (Slackware, Void, OpenBSD), and I know I could've used Alpine Linux for an even more lightweight environment.

However, it was the easiest platform to compile and install the drivers for my modern 802.11ac wifi dongle. Using Debian here also presents another interesting spin: can we fit everything within the RAM requirements despite systemd?

Let's see how some of my common tasks have been faring.

The tasks

Previously, I had been using this machine almost the same way as my work machine, really pushing the limits of what it could do. With the artificial limits now imposed, however, we have to be more careful. How much am I going to accomplish?

Communication

I am lucky enough that the people closest to me have adopted XMPP+OMEMO as their standard way of communicating with me. I consider it one of the right ways to do internet communication, and it can easily be covered on a Linux desktop in a variety of ways.

For pure text messaging, I love the profanity terminal XMPP client, especially since more recent versions support OMEMO encryption. Alas, the version packaged for Buster (0.6.0) doesn't, and building the newest one from source didn't work either, due to the libc version it was linking against. Alternatively, there's a similar-looking client called poezio, written in Python, that also supports OMEMO, but installing it here failed as well, probably for reasons similar to profanity's.

I ended up settling for gajim, a graphical client. It's heavier than the other two, but it works, and I can view pictures with it. I can still compensate by choosing lighter apps elsewhere to stay under 512MB.

I'm also using irssi for IRC, though I don't chat there very often.

Browsing and internet

Browsers are the first and foremost challenge when it comes to staying lightweight.

On one hand, you want them to be your "second operating system" and support every new thing the W3C tosses out, at any cost; on the other, you just want to read an interesting document with a few images here and there. Finding the balance between these is the key.

My first choice had always been dillo when it came to featherweight web browsers, but more recently, I discovered netsurf, which greatly impressed me.

Whereas dillo's CSS support faltered and messed up some web pages, netsurf rendered them surprisingly well, and without a large resource penalty: it takes about 150MB for a full browsing session. Plus, I can always close it and restart it later when needed, since it starts up quite quickly.

The problem? Again, the netsurf package is no longer in the Debian repositories, having been removed around the Stretch era for some reason. Bummer, I thought, but thankfully, the build process from source was quite easy. A detailed guide containing build instructions accompanies the source code, and in fact, a quick setup script named env.sh makes installing dependencies very easy.

During the build process, however, I had no choice but to go over the challenge's RAM limits. Memory usage climbed to 2GB during compilation; I'm not sure how that would have gone with only 512MB.

If I have to run the browser in parallel with another resource-hungry program, however, I'm quite happy to fall back to dillo, or even go full CLI with elinks. As long as a webpage is properly written, both browsers can display its content.

For email, my usual clients, even on resource-abundant systems, are already pretty lightweight (mutt and sylpheed), so nothing changed there. I could not imagine using something like Thunderbird in the 512MB world, though.

The challenge here is Mastodon: how can I use it without firing up a large browser? It turns out there's a command-line client for it called toot, written in Python, which you can install via pip. It's great for reading and posting toots, but it doesn't have a good way to catch up on notifications within the client itself (only through the separate toot notifications command). That's still missing, but it at least lets me catch up with things. RAM usage: about 37MB.
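Per-program figures like the ~150MB for netsurf or the 37MB for toot can be approximated by adding up the resident set size (RSS) of every process with a given name. A rough sketch, assuming Linux /proc; memof is a hypothetical helper. Shared libraries are counted once per process, so multi-process programs will look somewhat heavier than they really are, and /proc/<pid>/comm is truncated to 15 characters, so long program names have to be passed in truncated form.

```bash
# memof NAME: approximate resident memory (MB) of all processes named NAME.
# Reads VmRSS from /proc/<pid>/status (Linux only). Shared pages are counted
# once per process, so the total is an upper bound, not an exact cost.
memof() {
    local name=$1 total_kb=0 dir comm rss
    for dir in /proc/[0-9]*; do
        # The process may exit mid-loop; skip it if its files are gone.
        comm=$(cat "$dir/comm" 2>/dev/null) || continue
        [ "$comm" = "$name" ] || continue
        # Kernel threads have no VmRSS line, so default to 0.
        rss=$(awk '/^VmRSS:/ {print $2}' "$dir/status" 2>/dev/null)
        total_kb=$(( total_kb + ${rss:-0} ))
    done
    echo $(( total_kb / 1024 ))
}
```

For example, memof mocp or memof toot prints a single number in MB, which is handy for deciding what to close first.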

Media

Browsing YouTube or another large source of videos directly is clearly out of the question. The alternative? MPV with good ol' youtube-dl.

Debian's own version of youtube-dl (auto-installed with MPV) is usually too outdated to keep up with YouTube's changes, which is why I always download it straight from the youtube-dl website and keep it up to date with youtube-dl -U every now and then. The results are quite impressive for a browserless stack, and I can even wrap it in a script:

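```bash
#!/bin/bash
# yt: watch YouTube without a browser, lightweight mode.
# Format 18 is YouTube's 360p MP4 (video and audio in one stream).
if [ -z "$1" ]; then
    echo "usage: yt URL" >&2
    exit 1
fi
mpv --keep-open --ytdl-format=18 "$1"
```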

Save it as yt somewhere in your $PATH, make it executable (chmod +x), and call it with yt some_url from a terminal or the run prompt (Alt+F2).

For my local music collection, I could've gone the uber minimalist way and done something like:

```bash
mpv --shuffle --no-video *
```

That would let me skip between tracks in the terminal with the < and > keys, but I decided to go with a more sophisticated solution instead: MOC. Command-line, detachable, client-server, it's simply the best lightweight music player I've found. RAM usage sits at about 15MB while playing.

Managing documents

This is the other biggie right after web browsing. Everyone talks about browser wars, yet an equally big one passes almost unnoticed: office suites. Granted, outside of work I almost never deal with word documents or spreadsheets, so I don't pay nearly as much attention to them as I do to browsing. However, now that we have a target for maximum RAM consumption, can we do this?

Gnumeric and AbiWord are the lightweight players compared to LibreOffice. They have their own styling and usability issues, but I think they're functional enough in most cases to read and edit most MS Office documents. However, like everything on a 512MB system, their lightness is relative: Gnumeric consumed 72MB of RAM opening a basic spreadsheet, and I don't know of any lighter graphical alternative. Closing other tasks to make room for the document works too, though, since Gnumeric starts up quite quickly.

And when it comes to PDF viewing, I think nothing really beats the simplicity of MuPDF. Quick to start, minimal on resources and always usable, it feels almost like a CLI application due to the extensive keybindings that can control almost everything in it.

Findings and conclusion

Here's finally the part everybody wants to read: did I have some big epiphany, some enlightenment that I don't actually need so many computing resources? Did it spark a revolution inside me that made me throw away my newer, super powerful machines and dig out my oldies as my production environments?

Sadly, no. One thing I learned is that an operating system, no matter how light and fast, will never make a slow computer run faster. However, the experience did give me some interesting insights:

- Swapping is alright: ever since buying more RAM became affordable, and a much better option than buying a new computer, I came to dislike the very concept of using swap space. "Eww, my computer is using 50MB of swap, what's wrong with it?" Living in a more modest environment showed me that swapping is OK, not a nuisance, and that not every computing task requires 4GB of RAM. In fact, today I'd say you can do a lot more because of swapping.

- You can close programs, too: much like the previous point, if you are running short of memory or resources, it's OK to close programs you aren't using! We tend to open a default set of startup programs as a knee-jerk reaction, but don't actually use them most of the time. One example: your mail client. Seriously, do you even spend half an hour a day in total interacting with it? If you need to open a program but don't have enough memory, it's OK to close another one and re-open it later.

- Computing with focus is more efficient: ever since tabbed browsing was invented, we have developed a habit of middle-clicking everything that seems interesting to "read later," only to realize after a few hours that 30+ tabs have piled up and we have no idea why. Not having resources to spare taught me to be more focused and to do every task on my computer with a purpose. You can achieve the same results with fewer resources.

- There's beauty in small: be it the speed and nimbleness of the applications or the cleverness of gluing everything together with a terminal multiplexer, small applications are beautiful. As I said, they won't make a slow CPU fast, but the fact that they let you get things done in spite of low resources is empowering. And that's a goal everyone should strive for.
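The point about swap is easy to check instead of guessing: /proc/meminfo reports how much swap is in use, and each process's VmSwap field shows which programs were pushed out, often the idle ones like that mail client. A small sketch, assuming Linux; swap_report and swapped_procs are hypothetical names:

```bash
# Quick look at swap usage (Linux only; helper names are hypothetical).

# Total swap in use versus configured swap, in MB.
swap_report() {
    awk '/^SwapTotal:/ {t = $2} /^SwapFree:/ {f = $2}
         END {printf "swap in use: %dMB of %dMB\n", (t - f) / 1024, t / 1024}' \
        /proc/meminfo
}

# Processes that currently have pages in swap, largest first.
# Output lines look like "<kB> kB<TAB><process name>".
swapped_procs() {
    local dir
    for dir in /proc/[0-9]*; do
        awk '/^Name:/ {n = $2} /^VmSwap:/ {if ($2 > 0) print $2 " kB\t" n}' \
            "$dir/status" 2>/dev/null
    done | sort -rn
}
```

If swapped_procs mostly lists programs you haven't touched in a while, the swap is doing exactly what it should.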

So there you have it. I may not have done the challenge completely by the original standards, but the experience was similar, even if artificially emulated. Who knows, next time I might try it with my Raspberry Pi B running Alpine Linux in sys mode!

Have you tried the Old Computer Challenge by Solène, or a similar one before? How did you fare and what did you learn? Let me know on Mastodon!

Edit: since so many people asked, here's the wallpaper I'm using in the screenshot above. It's one I found while randomly browsing Reddit.

This post is #24 of my #100DaysToOffload project. Follow my progress on Mastodon!

Last updated on 08/02/21